Understanding how search engines process queries tells us what adjustments will make websites more visible in the SERPs. Search engine optimization grows more complex as the queries search engines can interpret grow more advanced. In a recent interview, Google Research Fellow Jeff Dean offered insight into the development of conversational search, the Knowledge Graph, and the future of the search engine giant.

Improving Features by Interpreting Unlabeled Data

Google’s Systems Infrastructure Group is working to make the Knowledge Graph more self-sufficient by investing in unsupervised learning as much as in supervised learning. According to Dean, combining these two approaches in machine learning will help improve the performance of Google’s search engine.

“In almost all cases you don’t have as much labeled data as you’d really like. And being able to take advantage of the unlabeled data would probably improve our performance by an order of magnitude on the metrics we care about,” Dean said. Google’s machine learning work is moving toward neural processing: the team wants the search engine to store and process new information automatically as new searches emerge, even while they continue to update the database and add information manually.
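Dean doesn’t describe Google’s specific techniques, but the general idea of learning from unlabeled data can be shown with a toy semi-supervised method called self-training (pseudo-labeling). In this hypothetical sketch, which is not Google’s method, a nearest-centroid classifier is fit on two labeled points, uses its own predictions to label the unlabeled points, and is then refit on everything. The data and function names are invented for the example.

```python
# Hypothetical toy data on a number line: two labeled examples
# and a handful of unlabeled ones.
LABELED = [(1.0, "a"), (9.0, "b")]
UNLABELED = [2.0, 3.0, 4.0, 8.0]


def centroids(points):
    """Average position of each label's points (a nearest-centroid model)."""
    groups = {}
    for x, label in points:
        groups.setdefault(label, []).append(x)
    return {label: sum(xs) / len(xs) for label, xs in groups.items()}


def predict(model, x):
    """Assign x the label whose centroid is closest."""
    return min(model, key=lambda label: abs(model[label] - x))


def self_train(labeled, unlabeled, rounds=3):
    """Self-training: pseudo-label the unlabeled points, then refit on all of them."""
    model = centroids(labeled)
    for _ in range(rounds):
        pseudo = [(x, predict(model, x)) for x in unlabeled]
        model = centroids(labeled + pseudo)
    return model


supervised_only = centroids(LABELED)
semi_supervised = self_train(LABELED, UNLABELED)
print(supervised_only, predict(supervised_only, 5.2))
print(semi_supervised, predict(semi_supervised, 5.2))
```

With this data, the model built from the labeled points alone puts 5.2 in class “b”, while the self-trained model, whose centroids have shifted toward where the unlabeled points actually sit, puts it in class “a”. Self-training can also reinforce its own early mistakes, so this loop only illustrates the idea.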

Breaking Down Queries into Manageable Data

Turning to the Knowledge Graph, Dean also discussed how Google has been developing conversational search to answer bigger, more complex queries. Google started moving in this direction in 2008 with its “Previous Query” feature. As its machine understanding improved, Google went from simply adding words to a previous query to form a new, related one, to actually understanding context and answering as though you were holding a conversation with a person. That shift arrived when Google rolled out conversational search last May.

“[We] kind of have baby steps in this area. You can ask Google, ‘Who’s the president of the United States of America?’ And it will come back with ‘Barack Obama.’ Then if you ask, ‘Who’s he married to?’ It will remember that ‘he’ in this instance means ‘Barack Obama’ and it knows what married means because we have this curated knowledge graph of facts about the world, and it will infer what sort of result you’re looking for and will come back with ‘Michelle Obama.’”
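To make the idea of remembering context concrete, here is a toy Python sketch. It is not how Google implements conversational search: the two-fact table stands in for the Knowledge Graph, the question patterns and the `ConversationState` class are hypothetical, and pronoun resolution is reduced to the naive rule that “he” refers to the previous answer.

```python
import re

# Hypothetical mini knowledge graph: (subject, relation) -> object.
# The facts mirror Dean's example; a real knowledge graph is vastly larger.
FACTS = {
    ("United States of America", "president"): "Barack Obama",
    ("Barack Obama", "spouse"): "Michelle Obama",
}

# Hypothetical question patterns, each mapped to a relation name.
PATTERNS = [
    (re.compile(r"who'?s the president of (?:the )?(.+?)\?", re.I), "president"),
    (re.compile(r"who'?s (.+?) married to\?", re.I), "spouse"),
]

PRONOUNS = {"he", "she", "him", "her"}


class ConversationState:
    """Remembers the last entity mentioned so pronouns can be resolved."""

    def __init__(self):
        self.last_entity = None

    def ask(self, question):
        for pattern, relation in PATTERNS:
            match = pattern.search(question)
            if not match:
                continue
            subject = match.group(1)
            # Naive coreference: a pronoun refers to the previous answer.
            if subject.lower() in PRONOUNS:
                subject = self.last_entity
            answer = FACTS.get((subject, relation))
            if answer is not None:
                self.last_entity = answer
            return answer
        return None


chat = ConversationState()
print(chat.ask("Who's the president of the United States of America?"))
print(chat.ask("Who's he married to?"))
```

Running it prints “Barack Obama” and then “Michelle Obama”. Real coreference resolution has to cope with several candidate entities and phrasings that no fixed pattern list can anticipate.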

To illustrate how this works, here’s the test Danny Sullivan ran and posted on Search Engine Land when conversational search first rolled out:

First, he ran a voice search by speaking into his microphone.

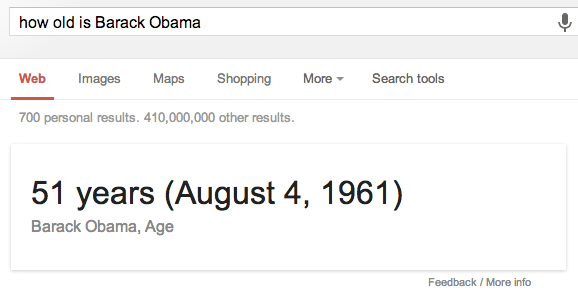

He then got a spoken answer, along with a result card:

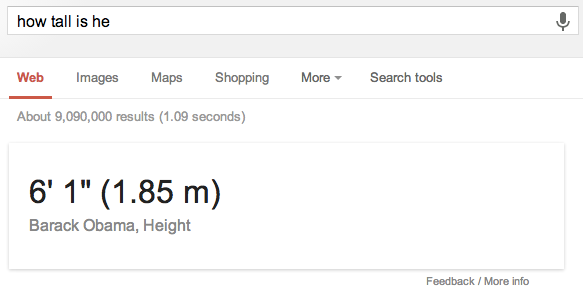

To follow up, he asked another question, replacing ‘Barack Obama’ with the pronoun ‘he.’ The answer still referred to Obama.

Google’s research team is now working on extending this basic concept to more complex searches. Dean’s example, “Please book me a trip to Washington DC,” raises follow-up questions that Google will need to handle if conversational search is to reach the level of an advanced virtual assistant:

“That’s a very high-level set of instructions. And if you’re a human, you’d ask me a bunch of follow-up questions, ‘What hotel do you want to stay at?’ ‘Do you mind a layover?’ – that sort of thing. I don’t think we have a good idea of how to break it down into a set of follow-up questions to make a manageable process for a computer to solve that problem. The search team often talks about this as the ‘conversational search problem.’”
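One simplified way dialog systems approach requests like this is slot filling: represent the task as a form with required fields, then ask a follow-up question for each field that is still empty. The sketch below is a hypothetical illustration of that general idea, not Google’s approach (Dean says they don’t yet know how to do this well); the slot names and questions are invented, with two of the questions borrowed from Dean’s quote.

```python
# Hypothetical trip-booking frame: each slot maps to the follow-up
# question to ask while that slot is still unknown.
TRIP_SLOTS = {
    "destination": "Where would you like to go?",
    "dates": "When do you want to travel?",
    "hotel": "What hotel do you want to stay at?",
    "layover_ok": "Do you mind a layover?",
}


def follow_up_questions(known):
    """Return the questions still needed, in slot order."""
    return [question for slot, question in TRIP_SLOTS.items() if slot not in known]


def fill_slot(known, slot, value):
    """Return a new dict with one more slot filled in."""
    updated = dict(known)
    updated[slot] = value
    return updated


# "Please book me a trip to Washington DC" fills only the destination slot.
request = {"destination": "Washington DC"}
for question in follow_up_questions(request):
    print(question)

# Once the user answers the layover question, it is no longer asked.
request = fill_slot(request, "layover_ok", False)
print(follow_up_questions(request))
```

The first call lists three open questions; after the layover answer, only the travel-date and hotel questions remain. The hard part Dean points to, working out which slots a vague request needs in the first place, is exactly what this sketch hard-codes.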

Where Search Will Be in Five Years

Google will continue moving toward a deeper understanding of text. According to Dean, results today are still based on exact and partial keyword matching, which is a crude version of what Google wants conversational search to achieve.

“[What] we’d like to get to [is] where we have higher level understanding than just words. If we could get to the point where we understand sentences, that will be really quite powerful,” he explains. He then gives examples of the level of complexity Google wants search to handle:

“It might be possible to build user interfaces that read the things people read and do the things people do. You could ask hard questions like, ‘What are some of the lesser known causes of the Civil War?’ Or queries where you have to join together data from lots of different sources. Like ‘What’s the Google engineering office with the highest average temperature?’ There’s no webpage that has that data on it. But if you know a page that has all the Google offices on it, and you know how to find historical temperature data, you can answer that question. But making the leap to being able to manipulate that data to answer the question depends fundamentally on actually understanding what the data is.”
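Once the data is structured, Dean’s temperature question boils down to a join followed by an aggregate. The sketch below shows only that final step; the hard part Dean describes, understanding that one page lists offices and another holds temperature data, is assumed away. The office names, cities, and readings are hypothetical placeholders, not real data.

```python
from statistics import mean

# Hypothetical source 1: a page listing offices and the city each is in.
OFFICES = {
    "Office A": "City X",
    "Office B": "City Y",
    "Office C": "City Z",
}

# Hypothetical source 2: historical temperature readings per city
# (degrees Celsius). These numbers are invented placeholders.
TEMPERATURES = {
    "City X": [14.0, 16.0, 15.0],
    "City Y": [25.0, 27.0, 26.0],
    "City Z": [8.0, 10.0, 9.0],
}


def warmest_office(offices, temperatures):
    """Join offices to cities, average each city's readings, return the max."""
    averages = {
        office: mean(temperatures[city])
        for office, city in offices.items()
        if city in temperatures  # skip offices with no temperature data
    }
    if not averages:
        return None
    best = max(averages, key=averages.get)
    return best, averages[best]


print(warmest_office(OFFICES, TEMPERATURES))
```

With these placeholder numbers the function picks Office B. No single source contains that answer; it only exists after the two sources are combined, which is the point of Dean’s example.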

This direction is consistent with Google’s policies of prioritizing quality content and penalizing spam. If Google can understand more complicated and abstract sentence queries, you can focus less on keyword optimization and other technical SEO details and more on content relevance and quality.

More importantly, search engines will become more than information repositories that you search with keywords. They will become fully functional virtual assistants capable of understanding language and syntax. Google’s role will be not only to understand queries but also to ask follow-up questions so it can deliver the best possible information sources.

We’d be happy to discuss how Google works today and where it’s headed. Talk to your account manager to learn more, or sign up to become a partner and receive the benefits of our SEO affiliate program.
